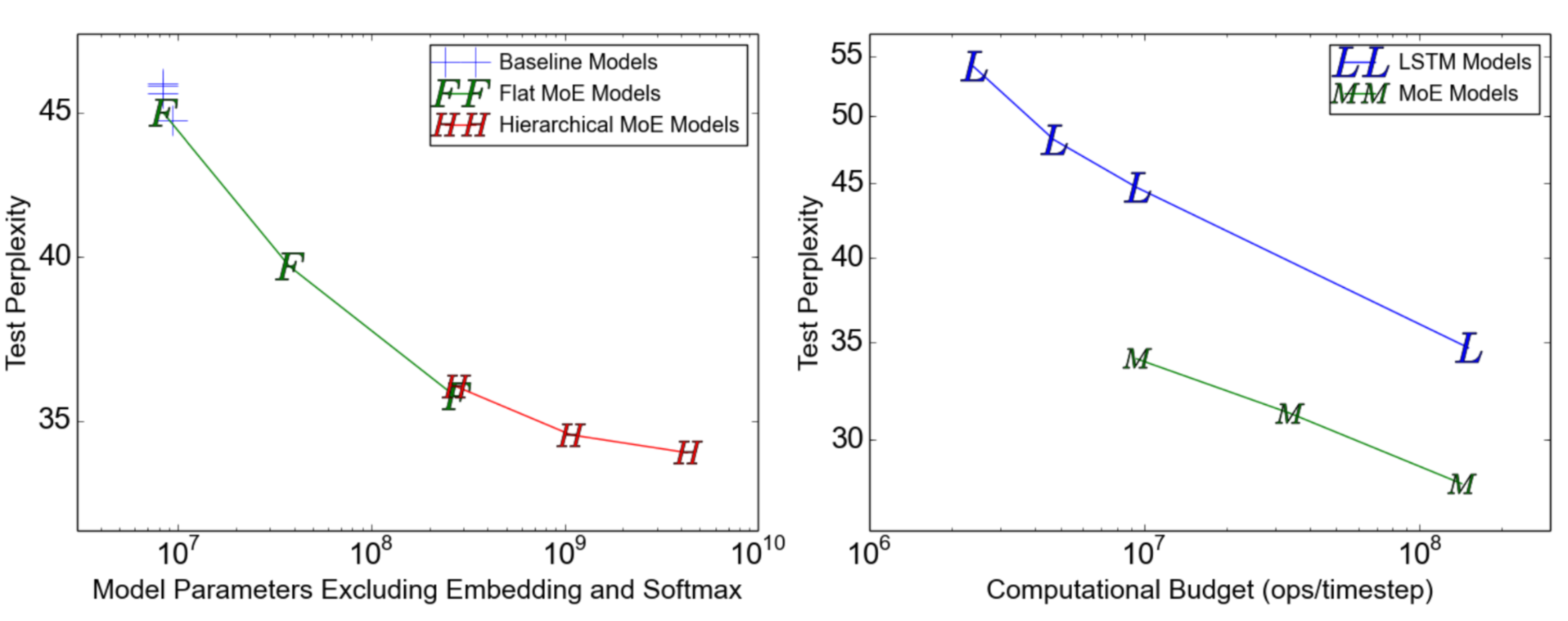

Number of parameters and GPU memory usage of different networks. Memory...

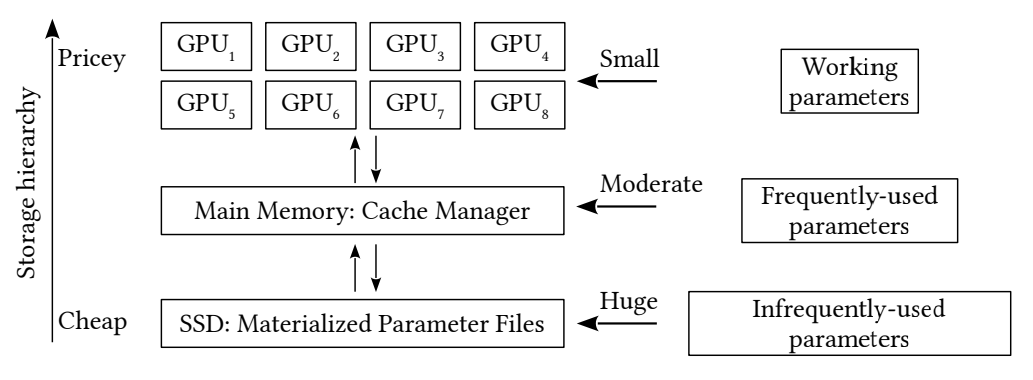

NVIDIA, Stanford & Microsoft Propose Efficient Trillion-Parameter Language Model Training on GPU Clusters | Synced

ZeRO & DeepSpeed: New system optimizations enable training models with over 100 billion parameters - Microsoft Research
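The sketch below works through the per-GPU memory arithmetic behind ZeRO. It follows the ZeRO paper's accounting for model states only: mixed-precision Adam keeps fp16 parameters (2 bytes/param), fp16 gradients (2 bytes/param) and K = 12 bytes/param of fp32 optimizer state. Activations, temporary buffers and fragmentation are ignored. The function name `model_state_bytes_per_gpu` is hypothetical, and the 7.5B-parameter, 64-GPU figures are the paper's worked example, not a measurement.

```python
# Per-GPU model-state memory under ZeRO partitioning (a sketch; ignores
# activations, communication buffers and memory fragmentation).
# Accounting: fp16 params (2 B) + fp16 grads (2 B) + K bytes of fp32
# Adam state (master weights, momentum, variance) per parameter.

K = 12  # bytes of optimizer state per parameter for mixed-precision Adam


def model_state_bytes_per_gpu(num_params: float, num_gpus: int, stage: int) -> float:
    """Hypothetical helper: bytes per GPU for ZeRO stage 0 (plain data parallel) to 3."""
    psi = num_params
    if stage == 0:  # every GPU holds a full replica of all model states
        return (2 + 2 + K) * psi
    if stage == 1:  # optimizer states partitioned across GPUs
        return (2 + 2) * psi + K * psi / num_gpus
    if stage == 2:  # optimizer states and gradients partitioned
        return 2 * psi + (2 + K) * psi / num_gpus
    if stage == 3:  # optimizer states, gradients and parameters partitioned
        return (2 + 2 + K) * psi / num_gpus
    raise ValueError(f"unknown ZeRO stage: {stage}")


if __name__ == "__main__":
    params = 7.5e9  # 7.5B-parameter model (the ZeRO paper's example)
    gpus = 64       # data-parallel degree
    for s in range(4):
        gb = model_state_bytes_per_gpu(params, gpus, s) / 1e9
        print(f"stage {s}: {gb:.1f} GB per GPU")
```

Run as a script, this prints 120.0, 31.4, 16.6 and 1.9 GB for stages 0 to 3. Each stage trades replicated state for more communication, which is why only stage 3 lets per-GPU memory shrink roughly linearly with the number of GPUs.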